Bryan de Oliveira

Hi! I'm Bryan, a 28-year-old AI researcher based in Goiânia, Brazil. I recently completed my Master's degree in Computer Science from the Federal University of Goiás, specializing in Deep Reinforcement Learning with a publication at ICML 2025. I am actively seeking PhD opportunities to advance cutting-edge research in AI and RL towards developing generalist agents that learn from experience.

Hi! I'm Bryan, a 28-year-old AI researcher based in Goiânia, Brazil. I recently completed my Master's degree in Computer Science from the Federal University of Goiás, specializing in Deep Reinforcement Learning with a publication at ICML 2025. I am actively seeking PhD opportunities to advance cutting-edge research in AI and RL towards developing generalist agents that learn from experience.

I currently work at the Center of Excellence in Artificial Intelligence (CEIA) and the Advanced Knowledge Center for Immersive Technologies (AKCIT), where I lead interdisciplinary teams on cutting-edge AI R&D projects. My current work focuses on LLM training and evaluation through reinforcement learning, emphasizing reasoning, tool use, information-seeking behavior, interpretability, and steerability. I also explore LLM-based multi-agent systems, investigating evolutionary search, computer use, and implicit feedback mechanisms. My approach combines theoretical advances in Deep RL with practical applications, bridging the gap between academic innovation and real-world deployment.

My research expertise spans Deep Reinforcement Learning, with particular focus on representation learning, model-based RL, and industry applications in pricing optimization and recommender systems. I have proven ability to structure and execute complex R&D projects from conception to publication, complemented by extensive engineering experience in developing and deploying ML systems. This background provides me with unique insights into both the theoretical foundations and practical constraints of AI systems.

I'm deeply interested in the fundamental questions of artificial intelligence, neuroscience, and their interconnections, particularly in how we can develop AI systems that learn better representations and leverage world models to effectively plan and adapt. I believe in a principled, interdisciplinary approach to AI research that combines theory with rigorous empirical validation and real-world impact.

Sliding Puzzles Gym: A Scalable Benchmark for State Representation in Visual Reinforcement Learning

ICML 2025 Poster · May 2025SPGym extends the 8-tile puzzle to evaluate RL agents by scaling representation learning complexity while keeping environment dynamics fixed, revealing opportunities for advancing representation learning for decision-making research. Read more

Reinforcement Learning for Debt Pricing: A Case Study in Financial Services

Workshop on RL4RS @ RLC 2025 · June 2025Offline RL with LTV-based rewards and bandit orchestration at a large financial institution improved collection values. Read more

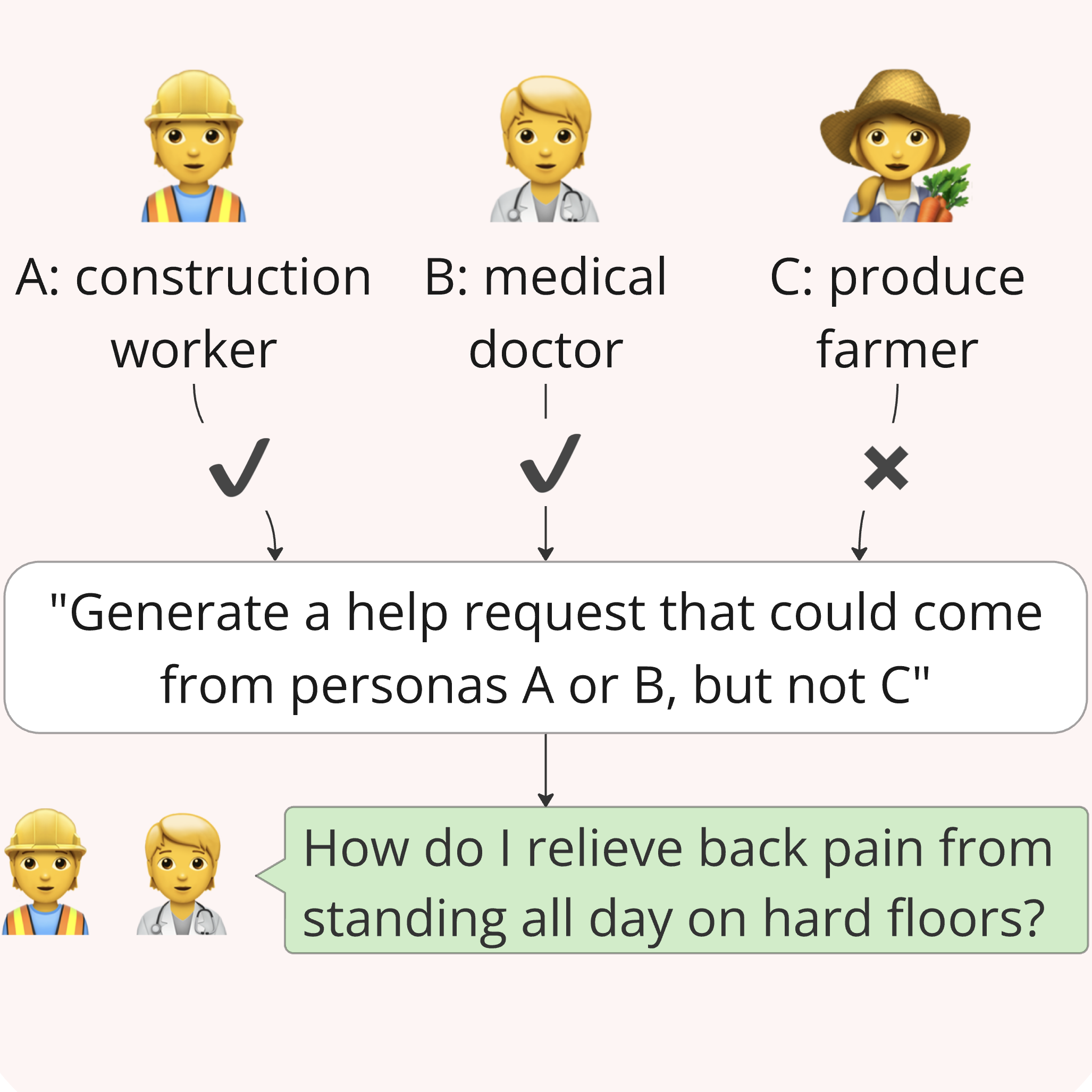

InfoQuest: Evaluating Multi-Turn Dialogue Agents for Open-Ended Conversations with Hidden Context

Workshop on SSI-FM @ ICLR 2025 · March 2025A benchmark for evaluating how LLMs handle ambiguous open-ended requests through dialogue, revealing that current models struggle to ask effective clarifying questions. Read more

PulseRL: Enabling Offline Reinforcement Learning for Digital Marketing Systems via Conservative Q-Learning

Workshop on Offline RL @ NeurIPS 2021 · October 2021PulseRL is an offline reinforcement learning system for optimizing communication channels in Digital Marketing Systems (DMS) using Conservative Q-Learning (CQL). It learns from historical data, avoiding costly interactions, and reduces bias from out-of-distribution actions. PulseRL outperformed RL baselines in real-world DMS experiments, proving its effectiveness at scale. Read more