Reinforcement Learning for Debt Pricing: A Case Study in Financial Services

Offline RL with LTV-based rewards and bandit orchestration at a large financial institution improved collection values.

Abstract

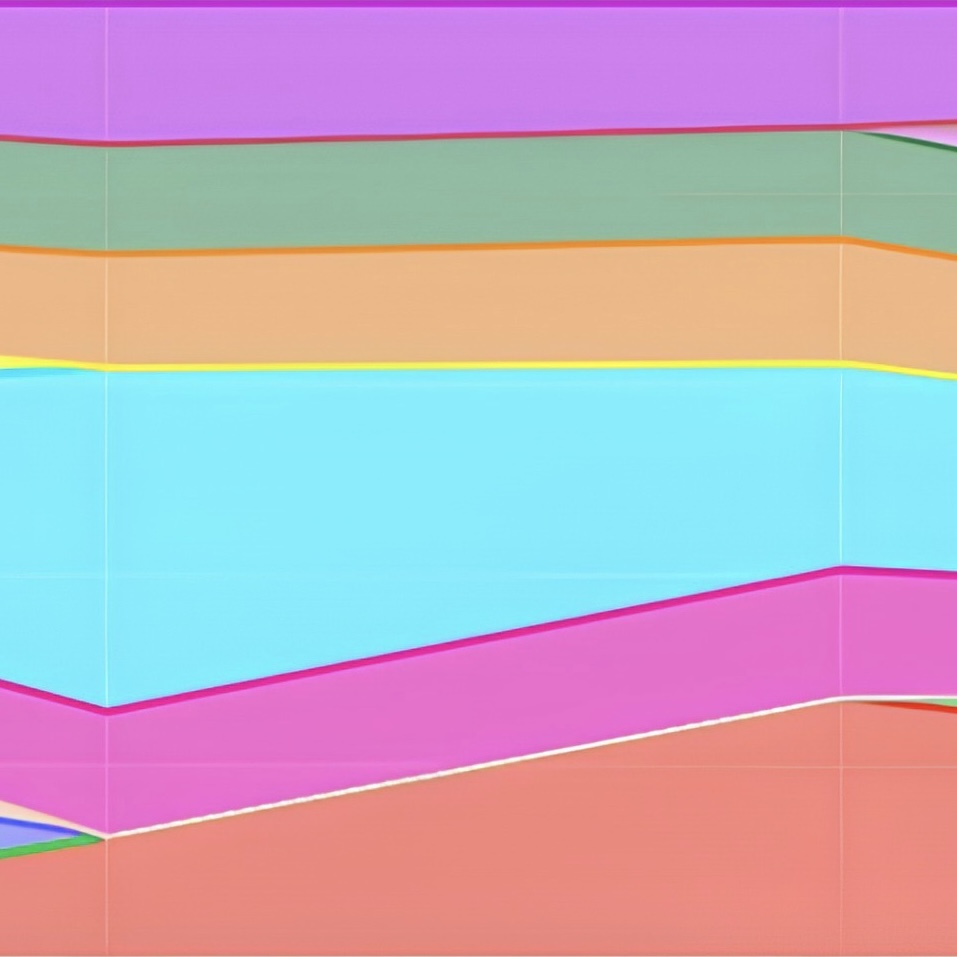

Traditional static discount policies in debt recovery often fail to adapt to diverse debtor behaviors and evolving market dynamics. We developed, evaluated, and deployed a reinforcement learning (RL) system at a large financial institution that optimizes discount policies to maximize recovered debt and minimize negotiation costs. The methodology has three parts: lifetime value (LTV) models that serve as dynamic reward functions, multi-armed bandit (MAB) meta-policies that evaluate and select among candidate policies autonomously, and an exploration of RL approaches including imitation learning and offline RL. In production, the RL-driven discount policies achieved lower average discounts and higher collection values than established baselines. The LTV models proved crucial for handling delayed feedback, while the MAB meta-policies orchestrated policy deployment in live operational settings. This work demonstrates that RL techniques are practically viable for real-world financial problems.
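To make the idea of a MAB meta-policy concrete, the following is a minimal sketch of a bandit that chooses which candidate discount policy handles the next account and updates its estimates from an observed reward. It is illustrative only: the abstract does not say which bandit algorithm was used, so epsilon-greedy is an assumption, and the class name, policy names, and reward values are hypothetical. In the setting described here, the reward would plausibly be an LTV-model estimate rather than a realized payment, since true feedback is delayed.

```python
import random


class EpsilonGreedyMetaPolicy:
    """Hypothetical meta-policy: picks one candidate discount policy per account."""

    def __init__(self, policy_names, epsilon=0.1, seed=0):
        self.policy_names = list(policy_names)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {name: 0 for name in self.policy_names}
        self.mean_reward = {name: 0.0 for name in self.policy_names}

    def select(self):
        # Try every candidate once before trusting any estimate.
        untried = [n for n in self.policy_names if self.counts[n] == 0]
        if untried:
            return untried[0]
        # Explore with probability epsilon, otherwise exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.policy_names)
        return max(self.policy_names, key=lambda n: self.mean_reward[n])

    def update(self, name, reward):
        # Incremental running mean of the rewards observed for this policy.
        self.counts[name] += 1
        self.mean_reward[name] += (reward - self.mean_reward[name]) / self.counts[name]


def simulate(rounds=2000, seed=0):
    """Toy simulation with made-up expected rewards (not results from the paper)."""
    rng = random.Random(seed)
    true_mean = {"static_baseline": 0.40, "imitation": 0.45, "offline_rl": 0.55}
    bandit = EpsilonGreedyMetaPolicy(true_mean, epsilon=0.1, seed=seed)
    for _ in range(rounds):
        name = bandit.select()
        reward = rng.gauss(true_mean[name], 0.1)  # stand-in for an LTV-based reward
        bandit.update(name, reward)
    return bandit


if __name__ == "__main__":
    result = simulate()
    for name in result.policy_names:
        print(name, result.counts[name], round(result.mean_reward[name], 3))
```

Epsilon-greedy is used here only because it is the simplest bandit to read; Thompson sampling or UCB would fit the same `select`/`update` interface.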

Presented at the Workshop on Practical Insights into Reinforcement Learning for Real Systems at the 2nd Reinforcement Learning Conference (RLC).